Elliot Epstein

I am a final-year PhD student at Stanford in the Institute for Computational and Mathematical Engineering. My work focuses on efficient and interpretable machine learning methods for time series and sequence modeling tasks where classical statistical techniques and standard architectures such as Transformers often fail or scale poorly. These include problems with large cross-sectional dimension and tasks involving very long sequences. In the summer of 2025, I was a Quant Research Intern at Jump Trading. I have spent previous summers at Google, most recently on the Gemini team, working on automated evaluation of instruction following in LLMs. Before Stanford, I completed an MS in Mathematical and Computational Finance at Oxford, along with a quant internship at a commodity trading firm.

epsteine@stanford.edu / CV / GitHub / Google Scholar / LinkedIn

Research

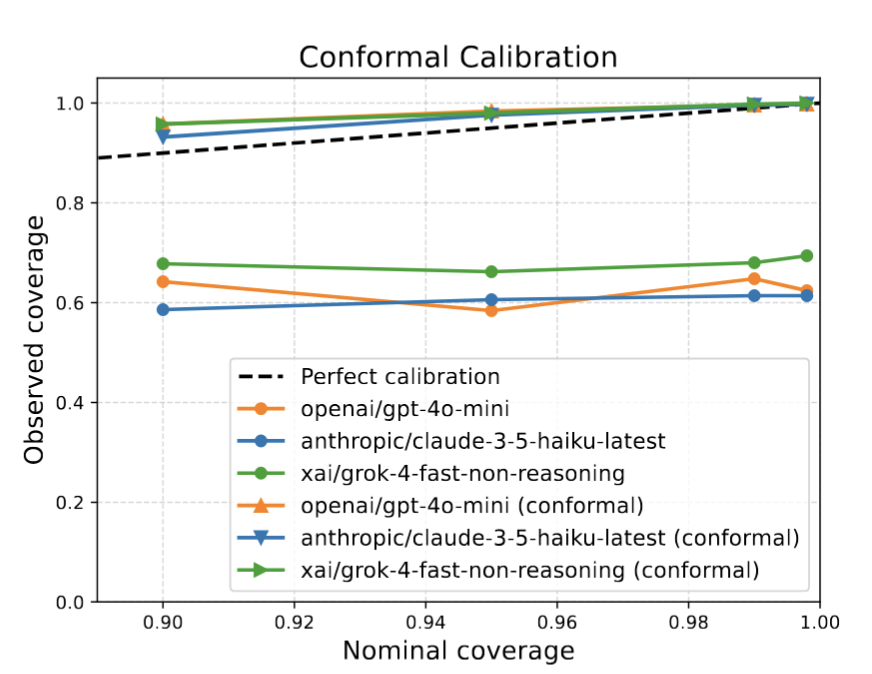

LLMs are Overconfident: Evaluating Confidence Interval Calibration with FermiEval
Elliot L. Epstein, John Winnicki, Thanawat Sornwanee, Rajat Vadiraj Dwaraknath
AIR-FM@AAAI (Best Paper Award), 2026
arxiv / slides

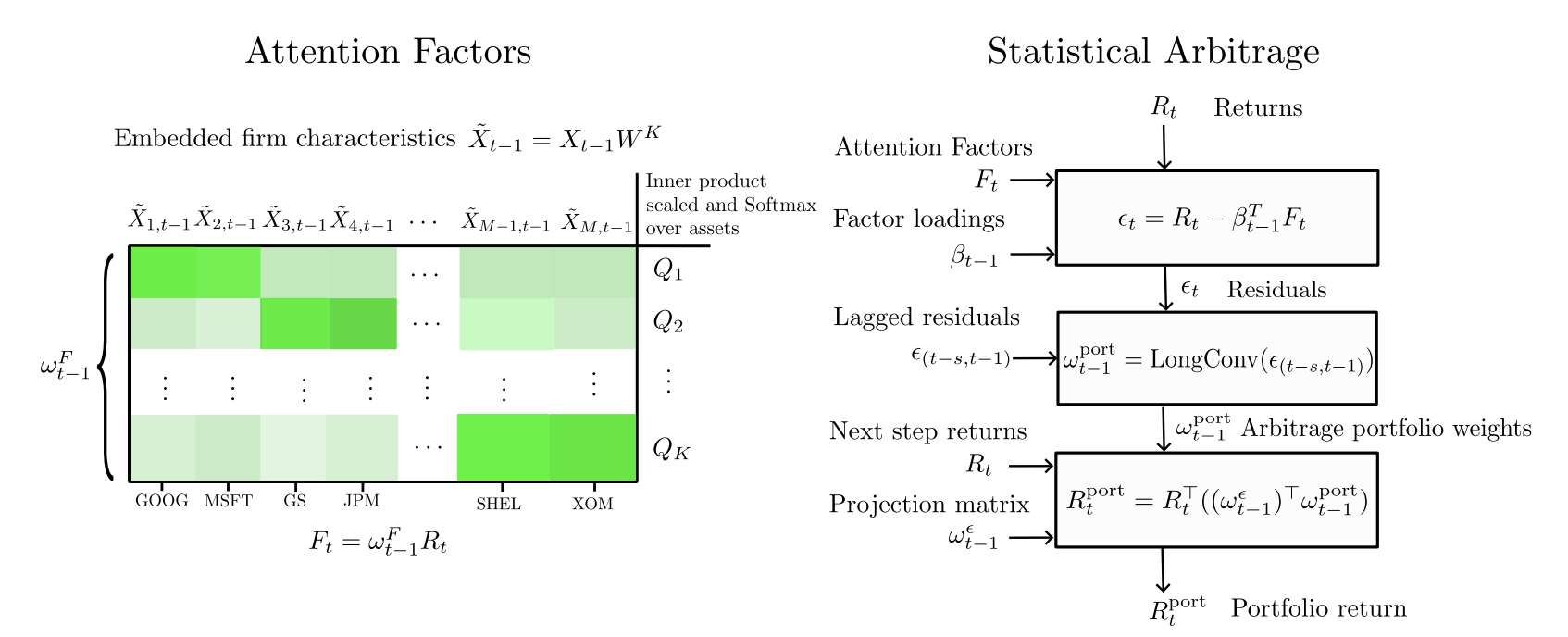

Attention Factors for Statistical Arbitrage
Elliot L. Epstein, Jaewon Choi, Rose Wang, Markus Pelger
International Conference on AI in Finance (Oral Presentation), 2025
arxiv / slides

A Set-Sequence Model for Time Series
Elliot L. Epstein, Apaar Sadhwani, Kay Giesecke
FinAI@ICLR, 2025
arxiv

Score-Debiased Kernel Density Estimation
Elliot L. Epstein*, Rajat Vadiraj Dwaraknath*, Thanawat Sornwanee*, John Winnicki*, Jerry Weihong Liu*
NeurIPS, 2025
arxiv / slides

MMMT-IF: A Challenging Multimodal Multi-Turn Instruction Following Benchmark
Elliot L. Epstein, Kaisheng Yao, Jing Li, Shoshana Bai, Hamid Palangi
SFLLM@NeurIPS, 2024
arxiv
Research done during my 2024 internship on the Gemini Team at Google.

Simple Hardware-Efficient Long Convolutions for Sequence Modeling
Elliot L. Epstein*, Dan Y. Fu*, Eric Nguyen, Armin W. Thomas, Michael Zhang, Tri Dao, Atri Rudra, Christopher Ré
ICML, 2023
arxiv / code / blog post
What is the simplest architecture you can use to get good performance on sequence modeling with subquadratic compute scaling in the sequence length? State space models (SSMs) perform well on long sequence modeling but require sophisticated initialization techniques and specialized implementations to achieve high quality and fast runtime. This research studies whether directly learning long convolutions over the sequence can match SSMs in performance and efficiency.
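As a rough illustration of why a long convolution can scale subquadratically in the sequence length, here is a minimal NumPy sketch of a causal convolution computed with the FFT in O(N log N). It is not the paper's implementation: the function names `fft_long_conv` and `direct_long_conv` are hypothetical, and the kernel `k` is random here, whereas in a model it would be a learned parameter.

```python
import numpy as np


def fft_long_conv(u, k):
    """Causal convolution of a 1-D signal u with a kernel k of the same length.

    Runs in O(N log N) by multiplying in the frequency domain.
    """
    n = u.shape[-1]
    # Zero-pad to 2N so the circular convolution computed by the FFT
    # matches the linear convolution on the first N outputs.
    fft_size = 2 * n
    u_f = np.fft.rfft(u, n=fft_size)
    k_f = np.fft.rfft(k, n=fft_size)
    y = np.fft.irfft(u_f * k_f, n=fft_size)
    return y[..., :n]


def direct_long_conv(u, k):
    """Reference O(N^2) causal convolution, used only to check the FFT version."""
    n = u.shape[-1]
    return np.convolve(u, k)[:n]


# Illustrative data: a random input sequence and a random "long" kernel.
rng = np.random.default_rng(0)
u = rng.standard_normal(1024)
k = rng.standard_normal(1024)
y = fft_long_conv(u, k)
```

The direct version is included only as a correctness check; both functions should agree up to floating-point error, while the FFT version avoids the quadratic cost as the sequence grows.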

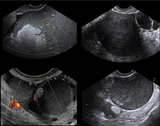

Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: comparison with expert subjective assessment
F Christiansen, Elliot L. Epstein, E Smedberg, Mans Akerlund, Kevin Smith, E Epstein
Ultrasound in Obstetrics & Gynecology, 2021
arxiv
This research develops a method to discriminate benign from malignant ovarian tumors using transfer learning from a model pretrained on ImageNet. The model achieves accuracy comparable to that of a human expert.

Internships

Quantitative Research Intern
Jump Trading
Jun. 2025 – Aug. 2025

PhD Software Engineering Intern
Google
Jun. 2024 – Sep. 2024
Intern on the Gemini team. Outcome: research paper “MMMT-IF: A Challenging Multimodal Multi-Turn Instruction Following Benchmark”.

Student Researcher
Google
Oct. 2023 – Jan. 2024
Worked on an LLM-based dialogue system.

Software Engineering Intern
Google
Jun. 2023 – Sep. 2023
Worked on an LLM-based dialogue system.

Intern, Quant and Data Group
EDF Trading
Apr. 2021 – Aug. 2021

Education

Stanford University
Ph.D. in Computational and Mathematical Engineering
Stanford, United States
2021 – Present
GPA: 4.10/4.3

University of Oxford
MS in Mathematical and Computational Finance
Oxford, United Kingdom
2020 – 2021

ETH Zurich
Exchange Student, Department of Mathematics
Zurich, Switzerland
2019 – 2020

KTH Royal Institute of Technology
BS in Engineering Physics
Stockholm, Sweden
2017 – 2020
GPA: 4.94/5.00

Teaching

Graduate Teaching Assistantships
Stanford, United States

Blog

Short articles on various topics.

Blog Posts
Ironman Italy 2024 - Race Report

Design and source code from Leonid Keselman's website