Elliot Epstein

I am an incoming fifth-year PhD student at Stanford in the Institute for Computational and Mathematical Engineering. My work focuses on efficient and interpretable machine learning methods for time series and sequence modeling tasks where classical statistical techniques and standard architectures such as Transformers often fail or scale poorly. These include problems with a large cross-sectional dimension and tasks involving very long sequences. Currently, I am a Quant Research Intern at Jump Trading. I have spent previous summers at Google, most recently on the Gemini team, working on automated evaluation of instruction following in LLMs. Before Stanford, I completed an MS in Mathematical and Computational Finance at Oxford, along with a quant internship at a commodity trading firm.

epsteine@stanford.edu / CV / GitHub / Google Scholar / LinkedIn

Research

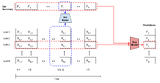

A Set-Sequence Model for Time Series
Elliot L. Epstein, Apaar Sadhwani, Kay Giesecke
FinAI@ICLR, 2025
arxiv

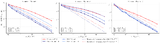

Score-Debiased Kernel Density Estimation
Elliot L. Epstein*, Rajat Vadiraj Dwaraknath*, Thanawat Sornwanee*, John Winnicki, Jerry Weihong Liu
FPI@ICLR, 2025
arxiv

MMMT-IF: A Challenging Multimodal Multi-Turn Instruction Following Benchmark
Elliot L. Epstein, Kaisheng Yao, Jing Li, Shoshana Bai, Hamid Palangi
SFLLM@NeurIPS, 2024
arxiv
Research done during my 2024 internship on the Gemini team at Google.

Simple Hardware-Efficient Long Convolutions for Sequence Modeling
Elliot L. Epstein*, Dan Y. Fu*, Eric Nguyen, Armin W. Thomas, Michael Zhang, Tri Dao, Atri Rudra, Christopher Ré
ICML, 2023
arxiv / code / blog post
What is the simplest architecture that achieves good performance on sequence modeling with compute that scales subquadratically in the sequence length? State space models (SSMs) perform well on long sequence modeling but require sophisticated initialization techniques and specialized implementations to achieve both high quality and fast runtime. This research studies whether directly learning long convolutions over the sequence can match SSMs in performance and efficiency.
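
For readers new to long convolutions: the key operation is a causal convolution whose kernel is as long as the input, which the FFT computes in O(N log N) time instead of the O(N²) of a direct sum. The NumPy sketch below is a generic illustration under simplifying assumptions (one channel, a fixed kernel `k`, and hypothetical function names `fft_causal_conv` and `direct_causal_conv`); it is not the paper's implementation.

```python
import numpy as np


def fft_causal_conv(u: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Causal convolution y[t] = sum_{s<=t} k[s] * u[t - s], computed via FFT.

    u and k both have length N. Zero-padding to length 2N makes the circular
    convolution computed by the FFT equal to the linear convolution.
    """
    n = u.shape[-1]
    fft_len = 2 * n
    u_f = np.fft.rfft(u, n=fft_len)  # rfft zero-pads the input to fft_len
    k_f = np.fft.rfft(k, n=fft_len)
    y = np.fft.irfft(u_f * k_f, n=fft_len)
    return y[..., :n]  # keep only the first N (causal) outputs


def direct_causal_conv(u: np.ndarray, k: np.ndarray) -> np.ndarray:
    """O(N^2) reference implementation used to check the FFT version."""
    n = len(u)
    return np.array([sum(k[s] * u[t - s] for s in range(t + 1)) for t in range(n)])


rng = np.random.default_rng(0)
u = rng.standard_normal(256)
k = rng.standard_normal(256)  # stands in for a learned long kernel
assert np.allclose(fft_causal_conv(u, k), direct_causal_conv(u, k))
```

The padding to 2N is the one design detail that matters here: without it, the FFT computes a circular convolution and late outputs would leak into early ones, breaking causality.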


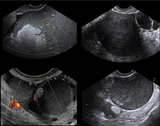

Ultrasound image analysis using deep neural networks for discriminating between benign and malignant ovarian tumors: comparison with expert subjective assessment
F Christiansen, Elliot L. Epstein, E Smedberg, Mans Akerlund, Kevin Smith, E Epstein
Ultrasound in Obstetrics & Gynecology, 2021
arxiv
This research develops a method to discriminate benign from malignant ovarian tumors using transfer learning from a model pretrained on ImageNet. The model achieves an accuracy comparable to that of a human expert.

Internships

Quantitative Research Intern
Jump Trading
Jun. 2025 – Aug. 2025

PhD Software Engineering Intern
Google
Jun. 2024 – Sep. 2024
Intern on the Gemini team. Outcome: research paper “MMMT-IF: A Challenging Multimodal Multi-Turn Instruction Following Benchmark”

Student Researcher
Oct. 2023 – Jan. 2024
Worked on an LLM-based dialogue system.

Software Engineering Intern
Jun. 2023 – Sep. 2023
Worked on an LLM-based dialogue system.

Intern, Quant and Data Group
EDF Trading
Apr. 2021 – Aug. 2021

Projects

These include coursework, side projects, and unpublished research work.

Robust Domain Adaptation by Adversarial Training and Classification
Stanford CS224N: Natural Language Processing
Mar. 2022
This project extended a method for training a question answering model to be robust to out-of-distribution data, using new data augmentation techniques, and added a classifier module that determines whether a question is answerable. Work done in collaboration with Nicolas Ågnes.

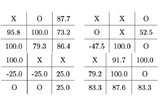

Value Iteration for Markov Decision Processes
Stanford CME 307: Optimization
Mar. 2022
This project formulated an MDP as a linear program and proved a contraction result. Extensions of value and policy iteration were implemented, their convergence rates were analyzed, and the findings were verified empirically on a simple tic-tac-toe implementation.
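
As background, plain value iteration repeatedly applies the Bellman optimality operator T, which is a γ-contraction in the sup norm, so the iterates converge geometrically to the optimal value function. The sketch below is a generic illustration, not the project's code: it assumes a small random tabular MDP with a transition array `P[a, s, s']` and a reward array `R[s, a]` (our own layout), uses hypothetical function names, and numerically checks the contraction inequality.

```python
import numpy as np


def bellman_operator(V, P, R, gamma):
    """(TV)(s) = max_a [R(s, a) + gamma * sum_{s'} P(s' | s, a) V(s')].

    P has shape (A, S, S) with P[a, s, s'] = P(s' | s, a); R has shape (S, A).
    """
    Q = R + gamma * np.einsum("ast,t->sa", P, V)  # Q[s, a]
    return Q.max(axis=1)


def value_iteration(P, R, gamma, tol=1e-10, max_iter=10_000):
    """Iterate V <- TV from V = 0 until successive iterates differ by < tol."""
    V = np.zeros(R.shape[0])
    for it in range(max_iter):
        V_new = bellman_operator(V, P, R, gamma)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, it + 1
        V = V_new
    return V, max_iter


rng = np.random.default_rng(0)
S, A, gamma = 5, 3, 0.9
P = rng.random((A, S, S))
P /= P.sum(axis=2, keepdims=True)  # normalize rows into transition probabilities
R = rng.random((S, A))

V_star, n_iter = value_iteration(P, R, gamma)

# Contraction check: ||TV1 - TV2||_inf <= gamma * ||V1 - V2||_inf
V1, V2 = rng.standard_normal(S), rng.standard_normal(S)
lhs = np.max(np.abs(bellman_operator(V1, P, R, gamma) - bellman_operator(V2, P, R, gamma)))
assert lhs <= gamma * np.max(np.abs(V1 - V2)) + 1e-12
```

Because the error shrinks by at least a factor of γ per sweep, the number of iterations needed grows roughly like log(1/tol) / log(1/γ), which is why convergence slows sharply as γ approaches 1.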


Multi-Fidelity Hamiltonian Monte Carlo
Stanford
Aug. 2020
poster
Hamiltonian Monte Carlo improves upon standard MCMC when gradients of the target density are available. However, in settings where these gradients are not available, such as inverse modeling from physical simulations, such methods cannot be applied directly. This research demonstrates the efficiency of a new algorithm, Multi-Fidelity Hamiltonian Monte Carlo, which uses a neural network surrogate model for the gradient. Work done as a research assistant with Eric Darve.
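
To illustrate why a surrogate gradient can be plugged into HMC at all, here is a generic HMC transition in which the gradient is passed in as a callable. This is a minimal sketch, not the Multi-Fidelity HMC algorithm from the project. It assumes the log-density itself can still be evaluated for the Metropolis test, and the names `hmc_step` and `surrogate_grad` are hypothetical; the "surrogate" here is just a deliberately inexact analytic gradient.

```python
import numpy as np


def hmc_step(q, log_prob, grad_log_prob, step_size, n_leapfrog, rng):
    """One HMC transition targeting exp(log_prob).

    grad_log_prob may be exact or approximate (e.g. a surrogate model).
    Each leapfrog sub-step is a shear, so the map is volume-preserving and
    reversible for any fixed gradient function; the Metropolis test uses the
    exact log_prob, so the chain still targets the correct distribution.
    """
    p0 = rng.standard_normal(q.shape)  # resample momentum
    q_new, p = q.copy(), p0.copy()
    p = p + 0.5 * step_size * grad_log_prob(q_new)  # initial half step
    for i in range(n_leapfrog):
        q_new = q_new + step_size * p  # full position step
        if i < n_leapfrog - 1:
            p = p + step_size * grad_log_prob(q_new)  # full momentum step
    p = p + 0.5 * step_size * grad_log_prob(q_new)  # final half step
    # Hamiltonian H(q, p) = -log_prob(q) + |p|^2 / 2
    h_old = -log_prob(q) + 0.5 * p0 @ p0
    h_new = -log_prob(q_new) + 0.5 * p @ p
    if np.log(rng.random()) < h_old - h_new:
        return q_new, True
    return q, False


rng = np.random.default_rng(1)
log_prob = lambda q: -0.5 * q @ q  # standard normal target, up to a constant
surrogate_grad = lambda q: -1.1 * q  # hypothetical, deliberately inexact gradient

q = np.zeros(2)
samples = []
for _ in range(5000):
    q, _ = hmc_step(q, log_prob, surrogate_grad, 0.2, 10, rng)
    samples.append(q)
samples = np.array(samples)
print(samples.mean(axis=0), samples.var(axis=0))  # should be roughly 0 and 1
```

The design point is the split of responsibilities: the (cheap) gradient only shapes the proposal, so a poor surrogate lowers the acceptance rate rather than biasing the samples.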


A Review of the Article “Gradient Descent Provably Optimizes Over-parameterized Neural Networks”
ETH Zurich
Aug. 2020
paper
This work theoretically studied the convergence of a shallow neural network trained with gradient descent. Using a gradient flow argument, the dynamics of the predictions, rather than those of the weights, were analyzed directly. Convergence was proved under the conditions that the network is polynomially over-parameterized and that the least eigenvalue of a data-dependent Gram matrix is positive. Work supervised by Arnulf Jentzen as part of my bachelor thesis at ETH Zurich.
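
For context, the core of the reviewed argument can be sketched as follows. The notation is ours and simplified, not an excerpt from the thesis: a two-layer ReLU network of width m whose second-layer weights a_r ∈ {−1, +1} are held fixed, n training pairs (x_i, y_i), squared loss ½ Σ_i (y_i − u_i)², and predictions u_i(t) = f(W(t), a, x_i). Under gradient flow on W, the predictions evolve linearly in the residual:

$$
f(\mathbf{W}, \mathbf{a}, \mathbf{x}) = \frac{1}{\sqrt{m}} \sum_{r=1}^{m} a_r\, \sigma(\mathbf{w}_r^\top \mathbf{x}), \qquad \sigma(z) = \max(z, 0),
$$

$$
\frac{d\mathbf{u}(t)}{dt} = \mathbf{H}(t)\bigl(\mathbf{y} - \mathbf{u}(t)\bigr), \qquad H_{ij}(t) = \frac{1}{m}\, \mathbf{x}_i^\top \mathbf{x}_j \sum_{r=1}^{m} \mathbb{1}\{\mathbf{w}_r(t)^\top \mathbf{x}_i \ge 0,\ \mathbf{w}_r(t)^\top \mathbf{x}_j \ge 0\}.
$$

The data-dependent Gram matrix is the infinite-width limit

$$
H^{\infty}_{ij} = \mathbb{E}_{\mathbf{w} \sim \mathcal{N}(\mathbf{0}, \mathbf{I})}\bigl[\mathbf{x}_i^\top \mathbf{x}_j\, \mathbb{1}\{\mathbf{w}^\top \mathbf{x}_i \ge 0,\ \mathbf{w}^\top \mathbf{x}_j \ge 0\}\bigr], \qquad \lambda_0 = \lambda_{\min}(\mathbf{H}^{\infty}) > 0.
$$

If over-parameterization keeps λ_min(H(s)) ≥ λ_0/2 for all 0 ≤ s ≤ t, then

$$
\frac{d}{dt}\|\mathbf{y} - \mathbf{u}(t)\|_2^2 = -2\,\bigl(\mathbf{y} - \mathbf{u}(t)\bigr)^\top \mathbf{H}(t)\,\bigl(\mathbf{y} - \mathbf{u}(t)\bigr) \le -\lambda_0 \|\mathbf{y} - \mathbf{u}(t)\|_2^2
\quad\Longrightarrow\quad
\|\mathbf{y} - \mathbf{u}(t)\|_2^2 \le e^{-\lambda_0 t}\, \|\mathbf{y} - \mathbf{u}(0)\|_2^2 .
$$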


Image Semantic Segmentation based on Deep Learning
Zhejiang University
Aug. 2019
Using TensorFlow, a Mask R-CNN model was trained and evaluated on a new hand-curated dataset. Work done with Filip Christiansen as part of a research visit to Zhejiang University in Hangzhou, China.

Education


Stanford University
PhD in Computational and Mathematical Engineering
Stanford, United States
2021 – Present
GPA: 4.16/4.3

University of Oxford
MS in Mathematical and Computational Finance
Oxford, United Kingdom
2020 – 2021

ETH Zurich
Exchange Student, Department of Mathematics
Zurich, Switzerland
2019 – 2020

KTH Royal Institute of Technology
BS in Engineering Physics
Stockholm, Sweden
2017 – 2020
GPA: 4.94/5.00

Teaching


Graduate Teaching Assistantships
Stanford, United States

Blog

Short articles on various topics.

Blog Posts
Ironman Italy 2024 - Race Report

Design and source code from Leonid Keselman's website |